Inspired by animal brains, which are extensive networks of interconnected neurons, artificial neural networks (ANNs) have come into and gone out of fashion over the decades. Unlike a biological brain, however, where any neuron can connect to any other neuron within a certain physical distance, ANNs have discrete layers, connections, and directions of data propagation.
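The phrase "discrete layers, connections, and directions of data propagation" can be made concrete with a tiny forward pass. The following is a minimal sketch. The layer sizes, the hand-picked weights, and the choice of ReLU and sigmoid activations are arbitrary illustrative assumptions, not any production architecture. Each neuron receives input only from the layer immediately before it, and data flows in one direction, from input to output.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def dense(inputs: Vector, weights: Matrix, biases: Vector) -> Vector:
    """One fully connected layer: each output neuron sums weighted inputs
    from the previous layer only, plus its own bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def relu(v: Vector) -> Vector:
    return [max(0.0, x) for x in v]


def sigmoid(v: Vector) -> Vector:
    return [1.0 / (1.0 + math.exp(-x)) for x in v]


def forward(x: Vector, layers: List[Tuple[Matrix, Vector]]) -> Vector:
    """Propagate x through the layers in a fixed order (input -> hidden -> output).
    Hidden layers use ReLU; the final layer uses sigmoid (an illustrative choice)."""
    for i, (weights, biases) in enumerate(layers):
        x = dense(x, weights, biases)
        x = sigmoid(x) if i == len(layers) - 1 else relu(x)
    return x


# Hypothetical toy network: 2 inputs -> 3 hidden neurons -> 1 output.
toy_layers = [
    ([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]], [0.0, 0.0, -1.0]),  # hidden layer
    ([[1.0, -1.0, 0.5]], [0.0]),                               # output layer
]

print(forward([1.0, 2.0], toy_layers))  # -> [0.5]
```

Training, which the following paragraph describes at Google scale, consists of adjusting these weights from data. Here they are fixed by hand purely to show how data propagates through the layers.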

Andrew Ng's breakthrough work at Google was to take neural networks and make them huge, increasing the number of layers and neurons, and then run massive amounts of data through the system to train it. In Ng's case, some 10 million images taken from YouTube videos were fed to the layers of the neural network. Fast forward to today: in some scenarios, image recognition by machines trained via deep learning already outperforms humans, at tasks ranging from recognising cats to identifying indicators of cancer in blood and tumours in MRI scans. Google's AlphaGo learned the game and trained for its Go match by playing against itself over and over, tuning its own neural network in the process. Thanks to Ng's seminal work and the progress in the field since, we believe the future will be promising and fun.

Here at Wetware Technology, AMIND evolved into an early version of Azita, a vertical learning machine for habit-oriented system control. Vertical machine learning applications take a business process in a specific industry and automate it in ways not previously thought possible. They leverage technical capabilities including, but not limited to, computer vision, natural language processing, audio transcription, and pattern recognition across large data sets to fundamentally transform an industry's value chain.

Visual SLAM (Simultaneous Localization and Mapping) algorithms build 3D maps of the world while simultaneously tracking the location and orientation of the camera (hand-held, head-mounted for AR, or mounted on a robot). SLAM algorithms are complementary to ConvNets and Deep Learning: SLAM focuses on geometric problems, while Deep Learning is the master of perception (recognition) problems. If you want a robot to move towards your refrigerator without hitting a wall, use SLAM. If you want the robot to identify the items inside your fridge, use ConvNets.

Self-driving cars are one of the most important applications of SLAM. However, Andrew Davison, one of the organizers of a SLAM workshop, argues that SLAM for autonomous vehicles deserves its own research track (notably, none of the workshop presenters talked about self-driving cars). For many years to come, it will make sense to continue studying SLAM from a research perspective, independent of any single Holy-Grail application. Autonomous vehicles involve too many system-level details and tricks, whereas research-grade SLAM systems require little more than a webcam, knowledge of algorithms, and elbow grease. As a research topic, Visual SLAM is much friendlier to the thousands of early-stage PhD students who will first need years of in-lab experience with SLAM before they can even start to think about expensive robotic platforms such as self-driving cars.

We build true automation by adapting to user behaviour instead of relying on a remote controller, and by building smart-home products and services that fit seamlessly into the way real people live. While every smart home is a smart building, not every smart building is a smart home. Enterprise, commercial, industrial, and residential buildings of all shapes and sizes (including offices, skyscrapers, apartment buildings, and multi-tenant offices and residences) are deploying IoT technologies to improve building efficiency, reduce energy costs and environmental impact, ensure security, and improve occupant satisfaction.

Smart homes may allow the elderly to stay in their comfortable home environments instead of moving to expensive healthcare facilities with limited capacity. Healthcare personnel can also track the overall health of elderly residents in real time and provide feedback and support from distant facilities.

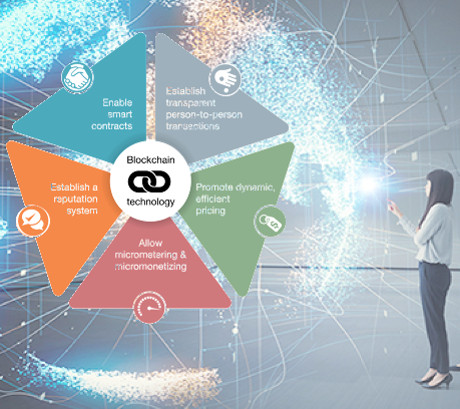

We look forward to revolutionising the logistics sector by combining Blockchain with Deep Learning. We envision a secure platform where actors can share and exchange information about their goods and products, making prediction and scheduling more adaptive to a dynamic market.

Our know-how has also found an application in the traditional classroom. In the future, teachers will not be limited by physical space or location: through remote access technology and online screen sharing, they will be able to teach from anywhere. Our dClassroom brings innovative technology to the traditional classroom, enabling the creation of interactive and engaging tutorial presentations. Teachers and tutorial creators can combine interactive holographic projection, Air-Gesture, and 3D image reproduction to engage the audience creatively, for more effective comprehension of the material and lasting knowledge retention.

A growing number of motorcycle riders are looking for technology that enables seamless and efficient communication within a group, and our neural-network-based learning algorithms show some promise in meeting this need. One benefit of this learning is that it can help improve mesh networks, which can help minimise power and connectivity problems.

Poor air quality has severe consequences for both human health and the environment. Monitoring the various components detected in our vicinity, such as CO2, smoke, and alcohol, is becoming important and necessary as part of smart city infrastructure. We developed our cloud-based smart system together with a leading filter-material company. The system uses various sensors to collect data and train our model. The model provides analyses such as filter-ageing prediction and user-habit-oriented conditioning, enabling cost-effective air purification for offices, hospitals, and hotel rooms.

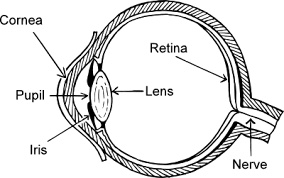

Azita recently gained a new subset for illness prediction in medicine, with the fundus as our current target. We have developed several components:

- an eyepiece that gives users more user-friendly, cloud-connected equipment (children are our initial target group)
- a cloud-based middleware interface
- our Azita model training system
- a mobile client that presents the received fundus image together with its predicted results and advice

Our algorithm learns from ophthalmic images to predict demographic parameters, body composition factors, and diseases of the cardiovascular, hematological, neurodegenerative, metabolic, renal, and hepatobiliary systems. Three main imaging modalities are included: retinal fundus photographs, optical coherence tomography, and external ophthalmic images.

As our cities get smarter, environmental sound classification has opened a new avenue for improving quality of life. Classifying environmental sounds such as breaking glass, helicopters, and crying babies can also aid surveillance systems and criminal investigations. We have started a new model project to capture and classify different sound samples from the environment and convert them into meaningful elements for applications. We hope the model can open up novel approaches for certain business applications and security systems.
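As a rough illustration of what "capture and classify different sound samples" involves, here is a toy sketch. It is not our production model. It extracts two classic hand-crafted audio features, RMS energy and zero-crossing rate, and assigns a clip to the class with the nearest feature centroid. The class names ("low_hum", "high_whistle"), the 8 kHz sample rate, and the synthetic sine-wave clips are hypothetical stand-ins for real recordings such as breaking glass or a crying baby. A practical system would typically use richer spectral features and a learned model.

```python
import math
from typing import Dict, List, Tuple

Features = Tuple[float, float]


def features(samples: List[float]) -> Features:
    """Return (RMS energy, zero-crossing rate) for a mono clip."""
    n = len(samples)
    rms = math.sqrt(sum(s * s for s in samples) / n)
    crossings = sum(1 for a, b in zip(samples, samples[1:]) if (a < 0) != (b < 0))
    return rms, crossings / (n - 1)


def train_centroids(labelled: Dict[str, List[List[float]]]) -> Dict[str, Features]:
    """Average the feature vectors of each class's training clips."""
    centroids = {}
    for label, clips in labelled.items():
        feats = [features(clip) for clip in clips]
        centroids[label] = (sum(f[0] for f in feats) / len(feats),
                            sum(f[1] for f in feats) / len(feats))
    return centroids


def classify(samples: List[float], centroids: Dict[str, Features]) -> str:
    """Nearest-centroid classification in (RMS, ZCR) feature space."""
    f = features(samples)
    return min(centroids, key=lambda label: math.dist(f, centroids[label]))


def tone(freq: float, amp: float, rate: int = 8000, n: int = 800) -> List[float]:
    """Hypothetical synthetic clip: a pure sine wave (0.1 s at 8 kHz by default)."""
    return [amp * math.sin(2 * math.pi * freq * i / rate) for i in range(n)]


centroids = train_centroids({
    "low_hum": [tone(100, 0.5), tone(120, 0.6)],
    "high_whistle": [tone(2000, 0.3), tone(1800, 0.4)],
})
print(classify(tone(110, 0.55), centroids))   # -> low_hum
print(classify(tone(1900, 0.35), centroids))  # -> high_whistle
```

Zero-crossing rate roughly tracks dominant frequency, and RMS tracks loudness. That is enough to separate these two synthetic classes, but not real-world sounds in general.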

In today's stressful environment, information about a user's emotions reflects their current emotional state. It can be used in a variety of applications, such as user emotion monitoring and cultural content services that recommend music according to the user's emotional state. We are evaluating a solution that captures speech and classifies it by emotion. The recognised emotion information then feeds a collaborative filtering technique that predicts users' emotional preferences and recommends content matching their emotions in a specific application.
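The collaborative filtering step can be sketched as plain user-based filtering with cosine similarity, run separately within each emotion context. This is an assumption made for illustration, not necessarily the technique in our solution. The speech emotion classifier is not shown. The emotion label "calm", the user names, the song IDs, and the 1 to 5 ratings are all hypothetical.

```python
import math
from typing import Dict, List, Optional

Ratings = Dict[str, Dict[str, float]]  # user -> {item: rating}


def cosine(a: Dict[str, float], b: Dict[str, float]) -> float:
    """Cosine similarity between two users over the items both have rated."""
    common = set(a) & set(b)
    if not common:
        return 0.0
    dot = sum(a[i] * b[i] for i in common)
    na = math.sqrt(sum(a[i] ** 2 for i in common))
    nb = math.sqrt(sum(b[i] ** 2 for i in common))
    return dot / (na * nb) if na and nb else 0.0


def predict(ratings: Ratings, user: str, item: str) -> Optional[float]:
    """Similarity-weighted average of other users' ratings for `item`."""
    num = den = 0.0
    for other, theirs in ratings.items():
        if other == user or item not in theirs:
            continue
        sim = cosine(ratings[user], theirs)
        if sim <= 0:
            continue
        num += sim * theirs[item]
        den += sim
    return num / den if den else None


def recommend(ratings: Ratings, user: str, k: int = 2) -> List[str]:
    """Top-k unseen items for `user`, ranked by predicted rating."""
    seen = set(ratings[user])
    candidates = {i for r in ratings.values() for i in r} - seen
    scored = [(predict(ratings, user, i), i) for i in candidates]
    ranked = sorted(((s, i) for s, i in scored if s is not None), reverse=True)
    return [i for _, i in ranked[:k]]


# Hypothetical ratings, grouped by the emotion detected when each rating was given.
by_emotion: Dict[str, Ratings] = {
    "calm": {
        "alice": {"song_a": 5, "song_b": 1},
        "bob":   {"song_a": 5, "song_b": 1, "song_c": 4, "song_d": 2},
        "carol": {"song_a": 1, "song_b": 5, "song_c": 1, "song_d": 5},
    },
}

detected = "calm"  # would come from the (not shown) speech emotion classifier
print(recommend(by_emotion[detected], "alice", k=1))  # -> ['song_c']
```

Alice's ratings match Bob's more closely than Carol's, so Bob's high rating for `song_c` dominates the prediction (about 3.17, versus about 2.83 for `song_d`). Keying the ratings by emotion is one simple way to make recommendations emotion-aware. Mean-centring the ratings (Pearson-style similarity) is a common refinement.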

Food and perfume are two simple pleasures in life that invoke two senses: taste and smell. Although both are closely linked with personal preferences and memories, the AI revolution has found a way to make an impersonal mark in both spaces. We are working with a partner fragrance company to develop ways for our machine learning to clone a smell into a scent.

A recently developed road-safety model processes real-time images to predict various possible scenarios, such as unsafe driving, car theft, and unexpected acceleration or deceleration of the vehicle.

Pollution resulting from waste affects our quality of life. We have developed an ecosystem for the recycling business and a compact recycling bin for households. Our AI model assists with smart sorting and shredding, and is linked to a waste-logistics backend for collection. We aim to provide an effective operating and cost model through intelligent waste management systems for our smart cities.

After extensive effort on voice and visual development, our new cloud-based Azita OS is about to move into field testing. If you are interested in becoming one of the selected early testers, stay tuned...

Our aim is to provide effective, user-friendly, bleeding-edge technology that improves everyday life.

Around the world, countless farmers are turning to the Internet of Things (IoT) to help optimise agricultural processes and prepare for the future. From irrigating blueberries in Chile to staving off crop disease in India, IoT is helping farmers make huge advances in water management, fertigation, livestock safety and maturity monitoring, crop communication, and aerial crop monitoring. We look forward to creating compelling IoT innovations with Azita, 5G, and robotics intelligence.

Share with us your vision for the future. Let us work together to create a better world for all of us!